Event Driven Microservices Architecture with Workflow

Event Driven Microservices Architecture with Orchestrator

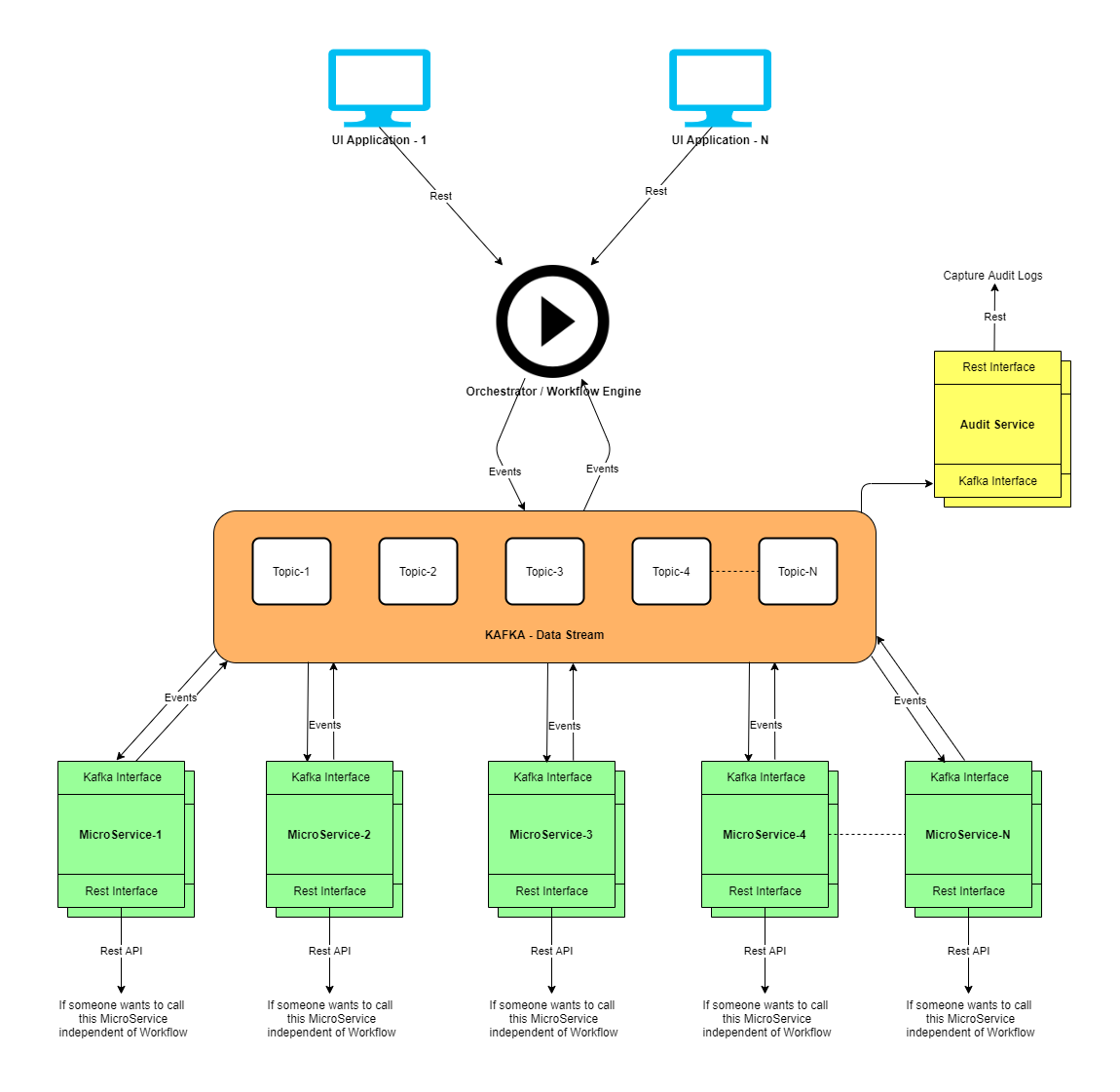

This article captures my experience building an enterprise platform based on an Event Driven Microservices Architecture with an Orchestrator / Workflow Engine.

Below are a few high-level components of this platform:

UI Applications:

- The UI applications were of two types: web apps and mobile apps. Each application served a different business need, and its form factor was chosen based on the customer's requirements.

- These UI applications interacted with the backend services via REST calls.

Orchestrator / Workflow Engine:

- An Orchestrator is a Workflow Engine that drives a customer journey. A customer journey involves calling multiple microservices in a sequence determined by the customer's inputs.

- The Orchestrator had two interfaces: REST and Kafka. The REST interface was exposed to the UI layer, and the Kafka interface connected the Orchestrator to the Kafka broker. The intent was that the UI layer would call the Workflow Engine through a REST API, while the Workflow Engine would interact with the various microservices internally via Kafka.

- The Workflow Engine was configured with different business journeys, each belonging to its respective UI application. These journeys used the same set of microservices, but in different sequences.

- A number of workflow engines, most of them open source, were explored, such as jBPM, Activiti, Camunda, Zeebe, Netflix Conductor, Uber Cadence, AWS Step Functions and Apache Airflow.
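To make the orchestration pattern concrete, here is a minimal Python sketch; it is an illustration, not the platform's actual implementation. It assumes hypothetical journeys (`web_onboarding`, `mobile_onboarding`), hypothetical services (`kyc`, `credit_check`, `account`) and a hypothetical topic naming scheme (`<service>.commands`, `orchestrator.replies`, `journey.completed`). Kafka is replaced by in-memory queues so the flow runs on its own: `start_journey` plays the role of the REST entry point, and `on_reply` advances a journey each time a microservice reports back over the "Kafka" interface. Both journeys reuse the same services in a different order.

```python
import uuid
from collections import defaultdict, deque
from dataclasses import dataclass, field

# In-memory stand-in for Kafka (hypothetical): topic name -> FIFO queue of events.
topics = defaultdict(deque)


def publish(topic: str, event: dict) -> None:
    topics[topic].append(event)


# Hypothetical journeys: the same microservices in different sequences.
JOURNEYS = {
    "web_onboarding": ["kyc", "credit_check", "account"],
    "mobile_onboarding": ["account", "kyc"],
}


@dataclass
class JourneyState:
    journey: str
    step: int = 0
    data: dict = field(default_factory=dict)


class Orchestrator:
    def __init__(self) -> None:
        self.instances = {}  # instance_id -> JourneyState

    def start_journey(self, journey: str, payload: dict) -> str:
        """What the REST endpoint would call: create a journey instance, dispatch step 0."""
        instance_id = str(uuid.uuid4())
        self.instances[instance_id] = JourneyState(journey, data=dict(payload))
        self._dispatch(instance_id)
        return instance_id

    def _dispatch(self, instance_id: str) -> None:
        # Send a command event to the command topic of the journey's current service.
        state = self.instances[instance_id]
        service = JOURNEYS[state.journey][state.step]
        publish(f"{service}.commands", {"instance_id": instance_id, "data": state.data})

    def on_reply(self, event: dict) -> None:
        """Handle a microservice reply and move its journey to the next step."""
        state = self.instances[event["instance_id"]]
        state.data.update(event["result"])
        state.step += 1
        if state.step < len(JOURNEYS[state.journey]):
            self._dispatch(event["instance_id"])
        else:
            publish("journey.completed", {"instance_id": event["instance_id"], "data": state.data})


def run_service(name: str) -> None:
    """Fake microservice: drain its command topic and reply to the orchestrator."""
    queue = topics[f"{name}.commands"]
    while queue:
        cmd = queue.popleft()
        publish("orchestrator.replies", {"instance_id": cmd["instance_id"], "result": {name: "done"}})


def pump(orch: Orchestrator) -> None:
    """Deliver messages until every topic is drained (stand-in for consumer loops)."""
    services = sorted({s for steps in JOURNEYS.values() for s in steps})
    while any(topics[f"{s}.commands"] for s in services) or topics["orchestrator.replies"]:
        for s in services:
            run_service(s)
        replies = topics["orchestrator.replies"]
        while replies:
            orch.on_reply(replies.popleft())


orch = Orchestrator()
web_id = orch.start_journey("web_onboarding", {"customer": "c-1"})
mobile_id = orch.start_journey("mobile_onboarding", {"customer": "c-2"})
pump(orch)
done = {e["instance_id"]: list(e["data"]) for e in topics["journey.completed"]}
print(done[web_id])     # ['customer', 'kyc', 'credit_check', 'account']
print(done[mobile_id])  # ['customer', 'account', 'kyc']
```

Keeping the sequence in configuration (`JOURNEYS`) rather than inside the services is what lets different UI applications reuse the same microservices; production workflow engines add durable state, timeouts and error handling on top of this basic loop.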

Kafka Data Stream:

- Kafka was used as the messaging platform, because our use case called for a pub-sub system.

- Events - We identified the different types of events that would flow through the system based on their publishers and subscribers, which for us were the Workflow Engine and the other microservices. We defined a common event format and used the Confluent Schema Registry to keep invalid events from flowing through the system, which made the platform easier to manage.

- Topics - Events were grouped into logical bundles, from which multiple topics were created. These topics were then assigned to the respective publishers and subscribers in the system.

- Partitions - Topics were also divided into partitions so that the platform could scale under heavy load.

- We used a tool named “Conduktor” to monitor and interact with the Kafka instance. It is a very handy tool that connects to the Kafka broker and Zookeeper.
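The event, topic and partition ideas above can be sketched in a few lines of Python. This is an illustration under stated assumptions: the schema is a hypothetical dict of required fields and types standing in for what Confluent Schema Registry enforces (the real registry works with Avro, JSON Schema or Protobuf schemas), the topic name `customer.events` and the `customer_id` key are made up, and `zlib.crc32` is used as a stable hash where Kafka's default partitioner uses murmur2.

```python
import zlib

# Hypothetical schema registry: topic -> {field name: expected Python type}.
# Stand-in for Confluent Schema Registry, which validates Avro/JSON Schema/Protobuf.
SCHEMAS = {
    "customer.events": {"event_type": str, "customer_id": str, "payload": dict},
}


class InvalidEvent(ValueError):
    pass


def validate(topic: str, event: dict) -> None:
    schema = SCHEMAS[topic]
    missing = schema.keys() - event.keys()
    if missing:
        raise InvalidEvent(f"missing fields: {sorted(missing)}")
    for name, expected in schema.items():
        if not isinstance(event[name], expected):
            raise InvalidEvent(f"{name} must be {expected.__name__}")


def choose_partition(key: str, num_partitions: int) -> int:
    # Stable hash so the same key always maps to the same partition.
    # (Kafka's default partitioner hashes keys with murmur2, not crc32.)
    return zlib.crc32(key.encode("utf-8")) % num_partitions


class Topic:
    def __init__(self, name: str, num_partitions: int) -> None:
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def publish(self, event: dict) -> int:
        validate(self.name, event)  # invalid events never reach the topic
        p = choose_partition(event["customer_id"], len(self.partitions))
        self.partitions[p].append(event)
        return p


topic = Topic("customer.events", num_partitions=3)
p1 = topic.publish({"event_type": "KYC_DONE", "customer_id": "c-42", "payload": {}})
p2 = topic.publish({"event_type": "ACCOUNT_OPENED", "customer_id": "c-42", "payload": {}})
print(p1 == p2)  # True: same customer, same partition

try:
    topic.publish({"event_type": "KYC_DONE", "payload": {}})
    rejection = None
except InvalidEvent as err:
    rejection = str(err)
print(rejection)  # missing fields: ['customer_id']
```

Keying events by customer sends every event for one customer to the same partition, which preserves their relative order while still spreading different customers across partitions for scale.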

Microservices:

- The system had more than 30 Microservices for different use cases. These Microservices performed complex operations, interacted with 3rd party APIs/Applications and persisted data in SQL / NoSQL databases.

- These Microservices could be used as part of a Workflow Journey or independently by an Internal or External client.

- The microservices had two interfaces: REST and Kafka. The REST interface was exposed so that a microservice could be used in standalone mode. The Kafka interface was used to interact with the Workflow Engine when the microservice was part of a customer-journey sequence; it was also used because a few microservices had to talk to each other directly.

- Intelligent retry logic was implemented in the microservices for the Kafka interface. If the DB was down or a 3rd party service was not responding, the microservice kept retrying the same Kafka message and did not commit (acknowledge) it. As soon as the DB or 3rd party service came back up, the microservice processed the current message and then consumed the messages queued up behind it in the topic. This made the system resilient, which mattered because the 3rd party services had performance and stability issues.

- Multiple instances of the same microservice were launched to scale the system under heavy load, using Kafka topic partitioning. All instances of a microservice belonged to the same consumer group, and the rule we followed was that the number of instances should never exceed the number of partitions in the topic: within a consumer group each partition is consumed by only one instance, so any extra instances would sit idle.
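A minimal Python sketch of the retry and scaling behaviour described above, with Kafka simulated in memory. `TransientError`, `consume_with_retry` and `assign_partitions` are hypothetical names, the backoff values are arbitrary, and the round-robin assignment is a simplification of Kafka's built-in partition assignors. The key property is that the offset only advances after a message is processed successfully, so a failing message blocks the ones queued behind it instead of being skipped.

```python
import time


class TransientError(Exception):
    """Hypothetical: raised when the DB or a third-party API is unavailable."""


def consume_with_retry(partition, committed, handler, max_backoff=8.0, sleep=time.sleep):
    """Process `partition` in order starting at offset `committed`; never skip a message.

    On TransientError the SAME message is retried with exponential backoff and the
    offset is not advanced, so later messages stay queued behind it.
    Returns the new committed offset (the next message to read).
    """
    offset = committed
    while offset < len(partition):
        backoff = 0.5
        while True:
            try:
                handler(partition[offset])
                break
            except TransientError:
                sleep(backoff)
                backoff = min(backoff * 2, max_backoff)
        offset += 1  # "commit" only after the message was processed successfully
    return offset


def assign_partitions(num_partitions, instances):
    """Simplified round-robin assignment within ONE consumer group.

    Each partition goes to exactly one instance, so instances beyond
    num_partitions receive nothing and sit idle.
    """
    assignment = {i: [] for i in instances}
    for p in range(num_partitions):
        assignment[instances[p % len(instances)]].append(p)
    return assignment


# A handler that fails twice on "m2" (e.g. DB down), then recovers.
failures = {"left": 2}
processed = []


def handler(msg):
    if msg == "m2" and failures["left"] > 0:
        failures["left"] -= 1
        raise TransientError("db unavailable")
    processed.append(msg)


new_offset = consume_with_retry(["m1", "m2", "m3"], 0, handler, sleep=lambda s: None)
print(processed, new_offset)  # ['m1', 'm2', 'm3'] 3
print(assign_partitions(3, ["svc-a", "svc-b", "svc-c", "svc-d"]))
# {'svc-a': [0], 'svc-b': [1], 'svc-c': [2], 'svc-d': []}
```

One caveat with a real Kafka consumer: if a retry loop blocks longer than the consumer's `max.poll.interval.ms`, the consumer is considered failed and the group rebalances its partitions, so long outages need extra care (for example, pausing the partition while continuing to poll).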

Audit Service:

- An auditing system was designed to capture the events and logs flowing through the system.

- The audit log service was also exposed via both REST and Kafka interfaces.

- Using this auditing system, we could recreate a customer's journey through the platform at any point in time, and it also helped with root cause analysis (RCA) of issues.

- We were also able to gauge the load and performance of the microservices using this smart auditing service.
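Assuming each service writes a STARTED and a COMPLETED audit record for every step it handles, both uses described above reduce to simple queries over the audit log. The `AuditEvent` shape, the status values and the function names below are hypothetical; the article does not describe the actual audit format. This is a sketch, not the platform's audit service.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEvent:
    # Hypothetical audit record; the article does not specify the real format.
    instance_id: str  # journey instance
    service: str
    status: str       # "STARTED" or "COMPLETED"
    ts_ms: int


def reconstruct_journey(events, instance_id, until_ms=None):
    """Ordered trail of one journey, optionally as it stood at time `until_ms`."""
    trail = [
        e for e in events
        if e.instance_id == instance_id and (until_ms is None or e.ts_ms <= until_ms)
    ]
    return [(e.ts_ms, e.service, e.status) for e in sorted(trail, key=lambda e: e.ts_ms)]


def avg_latency_ms(events):
    """Average STARTED -> COMPLETED time per service, across all journeys."""
    started = {}
    samples = defaultdict(list)
    for e in sorted(events, key=lambda e: e.ts_ms):
        key = (e.instance_id, e.service)
        if e.status == "STARTED":
            started[key] = e.ts_ms
        elif e.status == "COMPLETED" and key in started:
            samples[e.service].append(e.ts_ms - started.pop(key))
    return {svc: sum(v) / len(v) for svc, v in samples.items()}


log = [
    AuditEvent("j1", "kyc", "STARTED", 0),
    AuditEvent("j1", "kyc", "COMPLETED", 120),
    AuditEvent("j1", "account", "STARTED", 130),
    AuditEvent("j2", "kyc", "STARTED", 140),
    AuditEvent("j1", "account", "COMPLETED", 180),
    AuditEvent("j2", "kyc", "COMPLETED", 220),
]
print(reconstruct_journey(log, "j1", until_ms=150))
# [(0, 'kyc', 'STARTED'), (120, 'kyc', 'COMPLETED'), (130, 'account', 'STARTED')]
print(avg_latency_ms(log))  # {'kyc': 100.0, 'account': 50.0}
```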

Author : Mayank Garg, Technology Enthusiast and Georgia Tech Alumni

(https://in.linkedin.com/in/mayankgarg12)
